Check out sessions covering the latest hardware, software, and services from NVIDIA and our partner ecosystem showcased at GTC—on your own schedule. See how technology breakthroughs are transforming generative AI, healthcare, industrial digitalization, robotics, and more.

Brilliant Minds. Breakthrough Discoveries.

Explore the 2024 GTC AI conference.

Live From GTC Featured Episodes

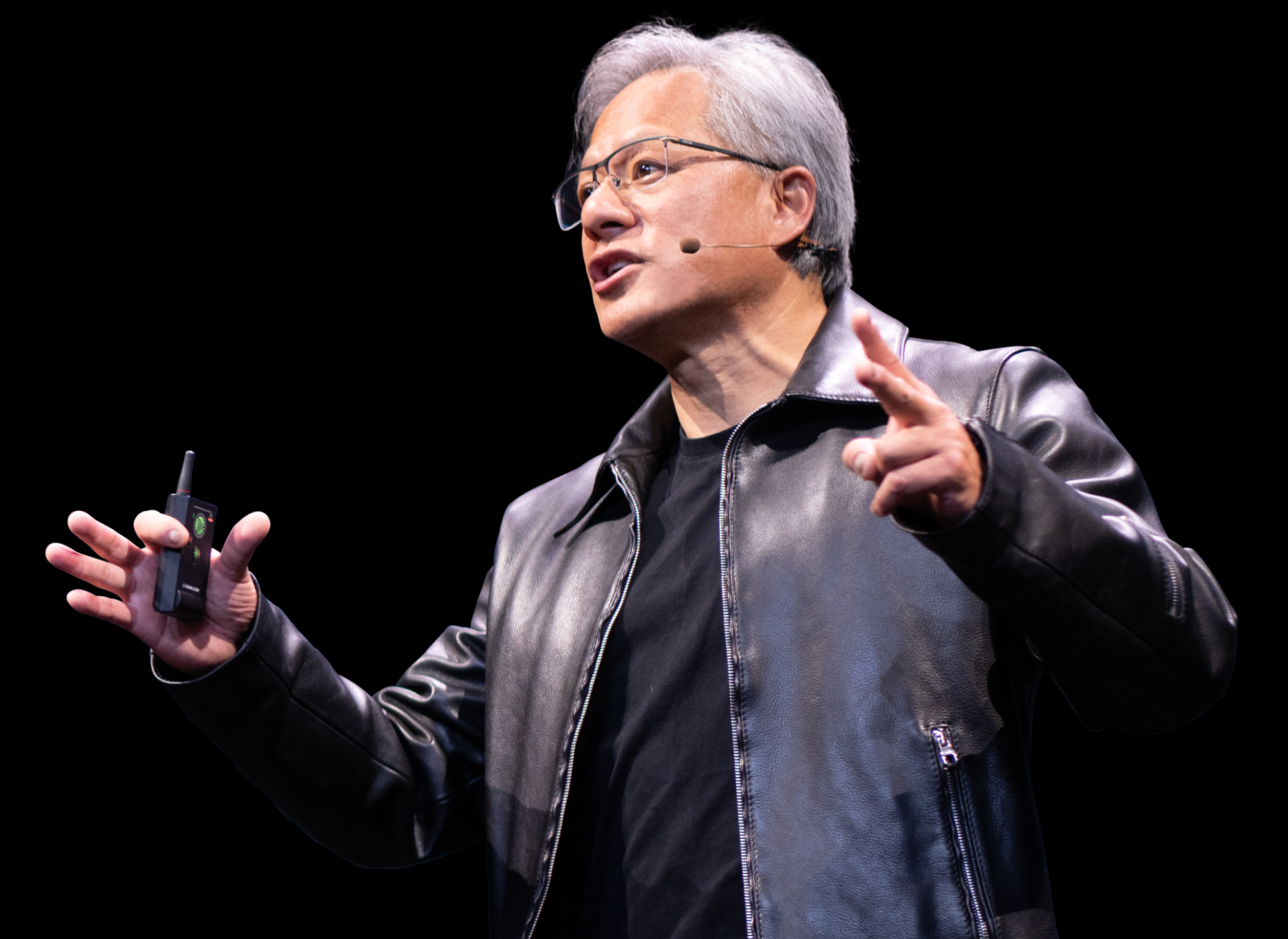

Jensen Huang | Founder and CEO | NVIDIA

Don’t Miss This Transformative Moment in AI

Watch Jensen Huang’s keynote as he shares AI advances that are shaping our future.

Check Out Sessions Chosen Just for You

Check Out These Groundbreaking Panels

Hear Big Ideas From Global Thought Leaders

Diamond Elite Sponsors

Diamond Sponsors

Learn, Connect, and Be Inspired

- DLI

- developer

- startup

See What Attendees Say About GTC

Stay on top of technology advancements and discover expert insights—on your schedule.